How to Use FX700

PRIMEHPC FX700 is a Fujitsu supercomputer equipped with the same A64FX CPU as Fugaku. ACCMS operates a small FX700 configuration and provides it as an evaluation environment for users of the supercomputer system.

To use this service, please submit the Application Form for Collaborative Research Project for FX700 ("Trial Use" or "Small Node Use"). Trial Use lets you use the FX700 for one month without screening. Use is free of charge.

The job scheduler was changed from PBS to Slurm in April 2023.

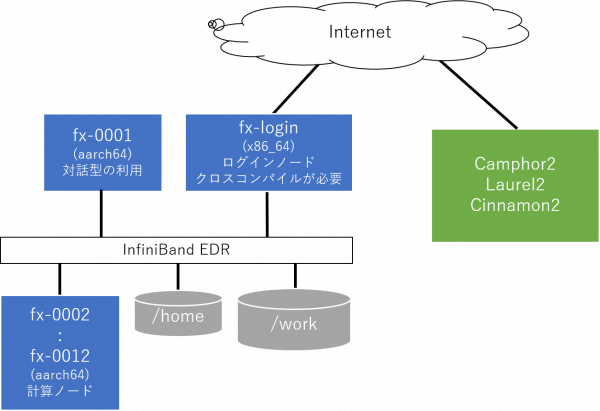

The FX700 is configured as a small PC cluster on a network separate from Camphor3 and Laurel3. The login node is a server with an Intel Xeon processor (x86_64 architecture). Programs must therefore be cross-compiled to run on the A64FX processors (aarch64 architecture) of the compute nodes. For convenience, interactive native compilation is also possible on compute node fx-0001.

Computing Node CPU Specifications

| Item | Description |
|---|---|
| Name | A64FX |
| Instruction set | Armv8.2-A SVE |
| Number of compute cores | 48 |
| Clock | 1.8 GHz |
| Theoretical peak performance (double precision) | 2.7648 TFLOPS |
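The theoretical peak in the table can be reproduced from the core count and clock. The calculation below assumes that each A64FX core has two 512-bit SVE FMA pipelines, each holding 8 double-precision lanes, and that a fused multiply-add counts as 2 floating-point operations:

```latex
\begin{aligned}
\text{FLOP per cycle per core} &= 2\ \text{pipelines} \times 8\ \text{lanes} \times 2\ \text{(FMA)} = 32 \\
P_{\text{peak}} &= 48\ \text{cores} \times 1.8 \times 10^{9}\ \text{cycles/s} \times 32\ \text{FLOP/cycle} \\
&= 2.7648 \times 10^{12}\ \text{FLOP/s} = 2.7648\ \text{TFLOPS}
\end{aligned}
```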

Computing Node Specifications

| Item | Description |
|---|---|
| CPUs per node | 1 |
| Memory capacity | 32 GiB (HBM2, 4 stacks) |
| Memory bandwidth | 1,024 GB/s |
| Interconnect | InfiniBand EDR |
| Internal storage | M.2 SSD Type 2280 slot (NVMe) |
| OS | Red Hat Enterprise Linux 8 |
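Two derived figures can help when sizing jobs: memory per core, and the byte-per-FLOP ratio (memory bandwidth divided by the theoretical peak from the CPU table). Treat them as rough guides. Not all of the 32 GiB is available to jobs; the per-node job limit in the resource table further down is 31200M.

```latex
\begin{aligned}
\frac{32\ \text{GiB}}{48\ \text{cores}} &= \frac{32768\ \text{MiB}}{48} \approx 682.7\ \text{MiB per core} \\
\frac{1024\ \text{GB/s}}{2764.8\ \text{GFLOP/s}} &\approx 0.37\ \text{bytes per FLOP}
\end{aligned}
```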

PRIMEHPC FX700 Product Information

To log in to the FX700, connect to the following host via SSH with public key authentication:

fx-login.kudpc.kyoto-u.ac.jp

- You can log in with the key you have already registered in the supercomputer user portal. (Keys added directly to authorized_keys in your Camphor3 and Laurel3 home directories cannot be used.)
- Inside the cluster, you can log in between nodes using host-based authentication.

/home and /work are configured as shared storage. Because /home is local storage on fx-login, operations on the login node respond quickly, but /home cannot withstand heavy load. Please use /work for calculations.

```
[root@fx-0001 ~]# df -h /home/ /work/
Filesystem         Size  Used Avail Use% Mounted on
fx-login-ib:/home  399G   27G  373G   7% /home
armst-ib:/work     7.0T   25G  7.0T   1% /work
```

- /home mounts fx-login:/home. The capacity limit is 20GB per user.
- /work mounts armst:/work. Please use it for calculations. The capacity limit is 500GB per user.
- /home and /work are mounted by autofs when they are accessed.
- If /work capacity is insufficient, please contact us.
- If the file server is not responding, please notify the administrator, and we will restart the file server.
- Files will be deleted after the service ends, so please make your own backups during the period of use.
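No quota command is described here. The sketch below compares a directory's disk usage, as reported by `du`, with the per-user limits listed above. `check_usage` is a hypothetical helper, not something installed on the FX700. It treats "GB" as GiB because `du -m` reports MiB (rounded up), which is an assumption.

```shell
#!/bin/bash
# Hypothetical helper (not provided on FX700): compare the disk usage of a
# directory with a per-user limit given in GB.
# Assumption: the limit is treated as GiB (1 GB = 1024 MiB) because
# du -m reports sizes in MiB, rounded up.
check_usage() {
  local dir=$1 limit_gb=$2
  local used_mb limit_mb
  used_mb=$(du -sm -- "$dir" | cut -f1)   # total usage of dir in MiB
  limit_mb=$(( limit_gb * 1024 ))
  if (( used_mb > limit_mb )); then
    echo "over: ${used_mb}M used of ${limit_mb}M in ${dir}"
    return 1
  fi
  echo "ok: ${used_mb}M used of ${limit_mb}M in ${dir}"
}

# Example: check /home (20GB limit) and your area under /work (500GB limit).
#   check_usage "$HOME" 20
#   check_usage /work/<your directory> 500
```

`du` walks the whole tree, so running it on a large /work directory can take a while.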

The available compilers are the Fujitsu compilers and GCC.
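The Fujitsu driver names listed below follow a pattern:
- a `px` suffix for cross-compilers on fx-login (x86_64);
- no suffix for native compilers on fx-0001 (aarch64);
- an `mpi` prefix for the MPI wrappers.

The sketch below encodes that pattern. `pick_compiler` and its argument names are hypothetical and exist only for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print the Fujitsu compiler driver for a language,
# host architecture and optional MPI flag, following the driver names on
# this page ("px" = cross-compiler on fx-login, "mpi" = MPI wrapper).
pick_compiler() {
  local lang=$1 arch=$2 mpi=${3:-}
  local base
  case "$lang" in
    fortran) base=frt ;;
    c)       base=fcc ;;
    cxx)     base=FCC ;;
    *) echo "unknown language: $lang" >&2; return 1 ;;
  esac
  case "$arch" in
    x86_64)  base="${base}px" ;;   # login node: cross-compile for A64FX
    aarch64) ;;                    # fx-0001: native compile
    *) echo "unsupported host: $arch" >&2; return 1 ;;
  esac
  if [[ "$mpi" == mpi ]]; then
    base="mpi${base}"
  fi
  echo "$base"
}
```

For example, `$(pick_compiler c "$(uname -m)" mpi) -Kfast,openmp -o a.out main.c` would run `mpifccpx` on fx-login and `mpifcc` on fx-0001. `main.c` is a placeholder file name.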

Commands for cross-compilation on fx-login

```
# For Fortran
[b12345@fx-login ~]$ frtpx -v
frtpx: Fujitsu Fortran Compiler 4.4.0
How to Use: frtpx [Option] File
[b12345@fx-login ~]$ mpifrtpx -v
frtpx: Fujitsu Fortran Compiler 4.4.0
How to Use: frtpx [Option] File

# For C
[b12345@fx-login ~]$ fccpx -v
fccpx: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
[b12345@fx-login ~]$ mpifccpx -v
fccpx: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: fccpx [Option] File

# For C++
[b12345@fx-login ~]$ FCCpx -v
FCCpx: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: FCCpx [Option] File
[b12345@fx-login ~]$ mpiFCCpx -v
FCCpx: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: FCCpx [Option] File
```

Commands for native compilation on fx-0001

```
# For Fortran
[b12345@fx-0001 ~]$ frt -v
frt: Fujitsu Fortran Compiler 4.4.0
How to Use: frt [Option] File
[b12345@fx-0001 ~]$ mpifrt -v
frt: Fujitsu Fortran Compiler 4.4.0
How to Use: frt [Option] File

# For C
[b12345@fx-0001 ~]$ fcc -v
fcc: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: fcc [Option] File
[b12345@fx-0001 ~]$ mpifcc -v
fcc: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: fcc [Option] File

# For C++
[b12345@fx-0001 ~]$ FCC -v
FCC: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: FCC [Option] File
[b12345@fx-0001 ~]$ mpiFCC -v
FCC: Fujitsu C/C++ Compiler 4.4.0
simulating gcc version 6.1
How to Use: FCC [Option] File
```

Typical compile options

| Option | Description |
|---|---|
| -Kopenmp | Enable OpenMP directives. |
| -Kparallel | Enable automatic parallelization. |
| -Kfast | Generate object programs that run fast on the target machine. Equivalent to -O3 -Keval,fast_matmul,fp_contract,fp_relaxed,fz,ilfunc,mfunc,omitfp,simd_packed_promotion. |
| -KA64FX | Generate object files for the A64FX processor. |
| -KSVE | Specify whether to use SVE (Scalable Vector Extension), an extension of the Armv8-A architecture. |
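A program built with -Kopenmp reads its thread count from the standard OpenMP variable `OMP_NUM_THREADS`. Inside a Slurm job, one common approach is to derive it from the `--cpus-per-task` request. sbatch exports that request as `SLURM_CPUS_PER_TASK`, but only when the option is given. The function name `set_omp_threads` is hypothetical; this is a sketch, not a site-provided tool.

```shell
#!/bin/bash
# Sketch: set the OpenMP thread count from the job's --cpus-per-task value.
# SLURM_CPUS_PER_TASK is only set when --cpus-per-task is given, so fall
# back to 1 thread otherwise.
set_omp_threads() {
  export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"
  echo "OMP_NUM_THREADS=${OMP_NUM_THREADS}"
}

# In an OpenMP or hybrid job script, call it before launching the program:
#   set_omp_threads
#   srun ./a.out
```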

Please refer to the manual for details on the compiler.

Fujitsu Compiler Manual (Access requires authentication with a supercomputer login account)

To use GCC, compile natively on fx-0001.

```
[b12345@fx-0001 ~]$ gfortran --version
GNU Fortran (GCC) 8.3.1 20191121 (Red Hat 8.3.1-5)
Copyright (C) 2018 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

[b12345@fx-0001 ~]$ gcc --version
gcc (GCC) 8.3.1 20191121 (Red Hat 8.3.1-5)
Copyright (C) 2018 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

[b12345@fx-0001 ~]$ g++ --version
g++ (GCC) 8.3.1 20191121 (Red Hat 8.3.1-5)
Copyright (C) 2018 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.
```

Please submit jobs with the sbatch command. Note that job scripts are written differently from those on Camphor3 and Laurel3. Camphor3 and Laurel3 use Slurm customized to their own specifications, while the FX700 uses only standard Slurm features.

There are two queues: debug, which is assigned one node, and short, which is assigned 10 nodes. Test your job in the debug queue first, then run it in the short queue.

```
$ sinfo -s
PARTITION AVAIL  TIMELIMIT   NODES(A/I/O/T) NODELIST
debug*       up   infinite        2/7/2/11 fx-[0002-0012]
short        up   infinite        2/7/2/11 fx-[0002-0012]
```

| Option | Description | Default | Maximum |
|---|---|---|---|
| #SBATCH -p QUEUE | Queue name | -- | -- |
| #SBATCH -N NODES | Number of nodes | 1 | Trial Use: 1 / Small Node Use: 8 |
| #SBATCH -n PROCS | Number of processes | 1 | Trial Use: 48 / Small Node Use: 384 |
| #SBATCH --cpus-per-task=CORES | Number of cores per process | 1 | 48 |
| #SBATCH --mem=MEMORY | Memory size per node | 650M | 31200M |
| #SBATCH -t HOURS:MINUTES:SECONDS | Upper limit of elapsed time | 1:00:00 (1 hour) | 168:00:00 (7 days) |
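Before submitting, you can check that a request fits on a node. The per-node core count is processes per node multiplied by cores per process, and it must not exceed 48. Memory must not exceed the 31200M maximum. The 650M default equals 31200M / 48, so it appears to correspond to one core's share of a node. `check_request` is a hypothetical helper. It assumes processes are spread as evenly as possible over the nodes.

```shell
#!/bin/bash
# Hypothetical helper (not installed on FX700): check a job request against
# the per-node limits in the table above, assuming 48 cores and 31200M of
# memory per compute node and processes spread evenly over the nodes.
check_request() {
  local nodes=$1 procs=$2 cpus_per_task=$3 mem_mb=$4
  if (( nodes < 1 )); then
    echo "nodes must be at least 1"
    return 1
  fi
  # Processes on the most heavily loaded node (integer ceiling of procs/nodes)
  local procs_per_node=$(( (procs + nodes - 1) / nodes ))
  local cores_per_node=$(( procs_per_node * cpus_per_task ))
  if (( cores_per_node > 48 )); then
    echo "too many cores per node: ${cores_per_node} > 48"
    return 1
  fi
  if (( mem_mb > 31200 )); then
    echo "too much memory per node: ${mem_mb}M > 31200M"
    return 1
  fi
  echo "ok: ${procs_per_node} procs/node x ${cpus_per_task} cores = ${cores_per_node} cores/node"
}
```

For instance, a 2-node request with 24 processes, 4 cores each and 30G (30720M) per node gives `check_request 2 24 4 30720`. That reports 12 processes per node using all 48 cores.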

Example 1 of Job Scripts (sequential job with 1 core and 8 GB of memory)

```bash
#!/bin/bash
#SBATCH -p debug
#SBATCH -N 1                 # Number of nodes
#SBATCH -n 1                 # Number of processes
#SBATCH --cpus-per-task=1    # Number of cores per process
#SBATCH --mem=8G             # Memory size per node

srun ./a.out
```

Example 2 of Job Scripts (OpenMP job with 16 threads on 16 cores and 8 GB of memory)

```bash
#!/bin/bash
#SBATCH -p debug
#SBATCH -N 1                 # Number of nodes
#SBATCH -n 1                 # Number of processes
#SBATCH --cpus-per-task=16   # Number of cores per process
#SBATCH --mem=8G             # Memory size per node
#SBATCH -t 1:00:00           # Upper limit of elapsed time: 1 hour

srun ./a.out
```

Example 3 of Job Scripts (MPI job on 2 nodes with 96 processes; 48 cores and 30 GB of memory per node)

```bash
#!/bin/bash
#SBATCH -p debug
#SBATCH -N 2                 # Number of nodes
#SBATCH -n 96                # Number of processes
#SBATCH --cpus-per-task=1    # Number of cores per process
#SBATCH --mem=30G            # Memory size per node
#SBATCH -t 1:00:00           # Upper limit of elapsed time: 1 hour

srun ./a.out
```

Example 4 of Job Scripts (hybrid job on 2 nodes with 24 processes x 4 threads; 48 cores and 30 GB of memory per node)

```bash
#!/bin/bash
#SBATCH -p debug
#SBATCH -N 2                 # Number of nodes
#SBATCH -n 24                # Number of processes
#SBATCH --cpus-per-task=4    # Number of cores per process
#SBATCH --mem=30G            # Memory size per node
#SBATCH -t 1:00:00           # Upper limit of elapsed time: 1 hour

srun ./a.out
```
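The examples above all use `#SBATCH -p debug`. In standard Slurm, options given to sbatch on the command line take precedence over the matching `#SBATCH` lines in the script. The same script can therefore be moved to the short queue after testing without editing it. The wrapper below is a hypothetical sketch of that workflow. `submit`, `DRY_RUN` and `job.sh` are illustrative names, not site tools.

```shell
#!/bin/bash
# Hypothetical wrapper: submit a job script to the debug or short queue.
# A -p given on the sbatch command line overrides "#SBATCH -p" in the script.
# Set DRY_RUN=1 to print the sbatch command instead of running it.
submit() {
  local partition=$1 script=$2
  case "$partition" in
    debug|short) ;;
    *) echo "unknown queue: $partition (use debug or short)" >&2; return 1 ;;
  esac
  if [[ -n "${DRY_RUN:-}" ]]; then
    echo "sbatch -p $partition $script"
  else
    sbatch -p "$partition" "$script"
  fi
}

# Typical flow (job.sh is a placeholder name):
#   submit debug job.sh     # test on the debug queue first
#   submit short job.sh     # then run on the short queue
#   squeue -u "$USER"       # check the state of your jobs
```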